(This is a blog post I am drafting for Nexor, and I am seeking wider thoughts and comments on it before publication.)

Recent events in the news have us pondering a fundamental trust issue with AI models, whether that’s trusting the data with which we train them, or the results they infer.

Large Language Model (LLM) poisoning attacks that have made the news within the last year have shown that anonymous sources and automated training loops can wreak havoc on the trustworthiness of results, or on the security controls implemented by an AI service.

Take ChatGPT, for example. One of the most popular AI tools of late, it is trained primarily on public sources from the internet, and it is known to produce factually incorrect information. Some of this may be attributed to the confidence and accuracy of the model itself. Still, a model can also be trained on factually incorrect data, just as a grey-zone style data poisoning attack can adversely affect the dataset used to train it.

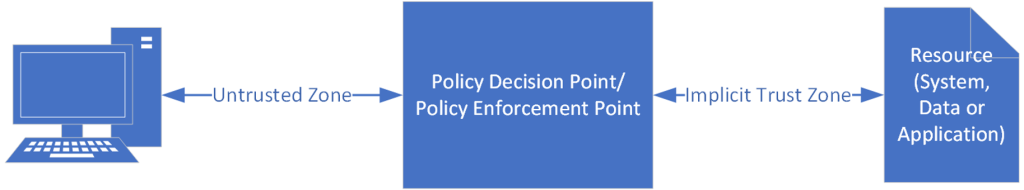

Fundamentally, I see this as another zero trust problem. Zero trust in its abstract definition means that I should be verifying and scrutinising every interaction against a security policy, such that I can place trust in the system, data, or application I’m trying to access. See my previous blog article – Zero Trust is not just an architecture – geordiepingu.co.uk – for an example scenario on using the principles of zero trust to withdraw cash from an ATM.

What do I need to know to have confidence that the dataset I’m training an AI model with is reliable? Similarly, what do I need to know to trust the response an AI model has inferred?

The key part of the Policy Decision Point / Policy Enforcement Point (PDP/PEP) architecture used in zero trust is that scrutiny of the interaction or artefact is what allows me to begin to trust the resource.
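As a rough illustration of that split between deciding and enforcing, here is a minimal Python sketch. It assumes a Policy Decision Point (PDP) that scrutinises an artefact against a policy, and a Policy Enforcement Point (PEP) that acts on the decision. The `Artefact` fields, the policy keys, and the source names are all hypothetical and chosen only for illustration; a real deployment would have a far richer policy.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Artefact:
    """Hypothetical description of something we are asked to trust."""
    name: str
    source: str   # where the artefact came from
    signed: bool  # whether a signature was verified upstream


# Hypothetical policy: accepted sources, and whether a signature is required.
POLICY = {
    "trusted_sources": {"internal-curated", "audited-partner"},
    "require_signature": True,
}


def policy_decision_point(artefact: Artefact, policy: dict) -> Tuple[bool, str]:
    """PDP: scrutinise the artefact against the policy and return a decision."""
    if artefact.source not in policy["trusted_sources"]:
        return False, f"source '{artefact.source}' is not trusted"
    if policy["require_signature"] and not artefact.signed:
        return False, "signature missing or unverified"
    return True, "meets policy"


def policy_enforcement_point(artefact: Artefact, policy: dict) -> str:
    """PEP: enforce the PDP's decision before the artefact is used."""
    allowed, reason = policy_decision_point(artefact, policy)
    if not allowed:
        raise PermissionError(f"{artefact.name} rejected: {reason}")
    return f"{artefact.name} accepted: {reason}"
```

The point of keeping the two functions separate is that the decision logic can change with the policy, while the enforcement step stays a simple gate in front of the resource.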

Theoretically, this approach could be applied to both training datasets and inference results. Take the training set, for example. If I am producing a training dataset for an LLM and the data needs to be trustworthy and reliable, I would want to establish, with a reasonable degree of confidence, the origin of the data I am placing in that dataset. I might scrutinise the authors of the data to ensure they are likely to produce reliable data, and even then I might require the data to be cryptographically traceable to its author.
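To make "cryptographically traceable to the author" a little more concrete, the sketch below binds a content digest to a vetted author and checks it before the content is admitted to a dataset. It is an illustration under stated assumptions, not a recommended design: the author registry and the record layout are hypothetical, and a shared-key HMAC is used only as a stand-in for a real public-key signature so that the example runs on the Python standard library.

```python
import hashlib
import hmac

# Hypothetical registry of vetted authors and their keys. A shared HMAC key is
# a stand-in for a real public-key signature scheme in this sketch.
AUTHOR_KEYS = {"alice@example.org": b"alice-demo-key"}


def sign_record(author: str, content: bytes, key: bytes) -> dict:
    """Producer side: bind the content digest to the author with a keyed tag."""
    digest = hashlib.sha256(content).hexdigest()
    tag = hmac.new(key, f"{author}:{digest}".encode(), hashlib.sha256).hexdigest()
    return {"author": author, "sha256": digest, "tag": tag}


def verify_record(record: dict, content: bytes) -> bool:
    """Consumer side: accept content only if the author is vetted, the digest
    matches the content, and the keyed tag verifies."""
    key = AUTHOR_KEYS.get(record["author"])
    if key is None:
        return False  # unknown author: no basis for trust
    digest = hashlib.sha256(content).hexdigest()
    if not hmac.compare_digest(digest, record["sha256"]):
        return False  # content has changed since it was recorded
    message = f"{record['author']}:{digest}".encode()
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


content = b"The Battle of Hastings took place in 1066."
record = sign_record("alice@example.org", content, AUTHOR_KEYS["alice@example.org"])
print(verify_record(record, content))                 # unmodified content
print(verify_record(record, content + b" (edited)"))  # tampered content
```

With a genuine signature scheme the consumer would hold only the author's public key, which is what gives traceability to the author rather than to anyone who knows a shared secret.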

Similarly, if I am going to use a result that has been inferred by an AI model, the level of trust I place in it should depend on several risk factors. If I am concerned about the data that has been used to train a model, then I would want to understand the provenance of that data. Would I want something trained on unaudited public data to advise me on a topic that requires high assurance of accuracy? Likewise, I might want to know whether an AI model is running software components that leave it vulnerable to poisoning attacks, to determine whether my answer could be compromised. I could go on with examples, but the principle remains: there are considerations to be made about results inferred from AI models before they can be used in any meaningful way.

Provenance data refers to a documented trail that accounts for the origin of a piece of data. In software development, this might mean accounting for how the software was developed, what toolchain was used to build it, and which processes it went through to be built. Several specifications and frameworks are actively trying to mature this, such as SLSA and in-toto. In the AI world, this could cover where the information used to train a model was sourced, how it was produced, and perhaps how it was verified for authenticity. I strongly believe provenance can indicate whether the process used to achieve a result contains risks that can or cannot be managed. For example, I can’t trust an AI model to help me write a blog on history if the dataset it has been trained on is an alternative history novel.

Based on some research I conducted with Dstl and industry partners involving software supply chain security, I see a similarity between the risks and potential risk management strategies with AI models. A generic case might be that I can’t understand the software bill of materials of some software, and therefore I cannot completely understand the risks that software presents in a safety or security-critical context.

Software supply chain risks closely resemble some AI training dataset risks. How do I ensure an npm library hasn’t got a zero-day backdoor hidden inside? How do I ensure a picture from the internet isn’t poisoning my AI model?

Is my supplier producing code that follows the required coding guidelines for my security-critical application? Is the Wikipedia article I am training my model on accurate at the time of training (or has someone adversely edited it)?

The SLSA specification, which aims to allow automated decisions about the integrity of software, defines the purpose of provenance as allowing consumers of provenance data to confirm that artefacts are built according to expectations and that they can be faithfully rebuilt if necessary. If implemented at the beginning of a software project and used throughout the supply chain, it allows a chain of provenance information, including software attestations, to be created. Those attestations can then be continuously and automatically tested in a DevSecOps environment against a relevant security policy.
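An automated test of an attestation against a policy might look something like the following Python sketch. The attestation fields, the policy keys, and the URLs are hypothetical and deliberately simplified; they are not the SLSA or in-toto schema, and the sketch assumes the attestation itself has already been authenticated by some other means.

```python
import hashlib

# Hypothetical policy for a pipeline gate: which builders and source
# repositories we are prepared to accept artefacts from.
BUILD_POLICY = {
    "allowed_builders": {"https://ci.example.org/trusted-builder"},
    "allowed_sources": {"https://git.example.org/team/app"},
}


def check_attestation(artefact: bytes, attestation: dict, policy: dict) -> list:
    """Return a list of policy violations; an empty list means the artefact passes."""
    violations = []
    if hashlib.sha256(artefact).hexdigest() != attestation.get("artefact_sha256"):
        violations.append("artefact digest does not match attestation")
    if attestation.get("builder") not in policy["allowed_builders"]:
        violations.append("builder is not on the allowed list")
    if attestation.get("source") not in policy["allowed_sources"]:
        violations.append("source repository is not on the allowed list")
    return violations


artefact = b"compiled-binary-bytes"
attestation = {
    "artefact_sha256": hashlib.sha256(artefact).hexdigest(),
    "builder": "https://ci.example.org/trusted-builder",
    "source": "https://git.example.org/team/app",
}

# A pipeline step could fail the build whenever this list is non-empty.
print(check_attestation(artefact, attestation, BUILD_POLICY))
```

Returning the full list of violations, rather than stopping at the first one, gives whoever reviews a failed build the complete picture in a single run.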

In real-world terms, the provenance of components, tooling, inference, and data helps me holistically understand the risks that are presented to my system or capability. If my AI model is trained on the contents of Wikipedia and my results reflect this, then I can manage the risk of data inaccuracy to the degree that I am comfortable with. Similarly, if my software has records that prove it has been developed to my requested guidelines, I can manage the risk of running that software in my safety-critical or security-critical environment. All of this comes with the caveat that I can trust the provenance data itself, but this is not an impossible task.

While there is no silver bullet for preventing a poisoning attack, provenance allows us to make decisions about data – whether that’s software, datasets, AI, or the contents of a USB stick (or floppy disk) that you got from a random person on the London Underground – and about how we deal with it based on our risk appetite and tolerance. Supposing there is a reasonable method for trusting the provenance data to begin with, one could reasonably either control what data an AI model is trained on, by accepting or rejecting inputs based on their provenance, or decide whether a user should trust what an AI model is inferring.

Empowering systems and people to make risk-based decisions based on data is a significant step toward reducing the risk of being compromised by a poisoning attack.
