The internet has been criticising the capability of AI, mostly in jest, for several years now. It started when computer vision was in its infancy and cats were often misidentified as dogs, back when blockchain was still the hype for Y Combinator candidates and investors with more money than technical prowess.

A flow chart that I used as my Apple Watch watchface in 2018, during my NYC stint.

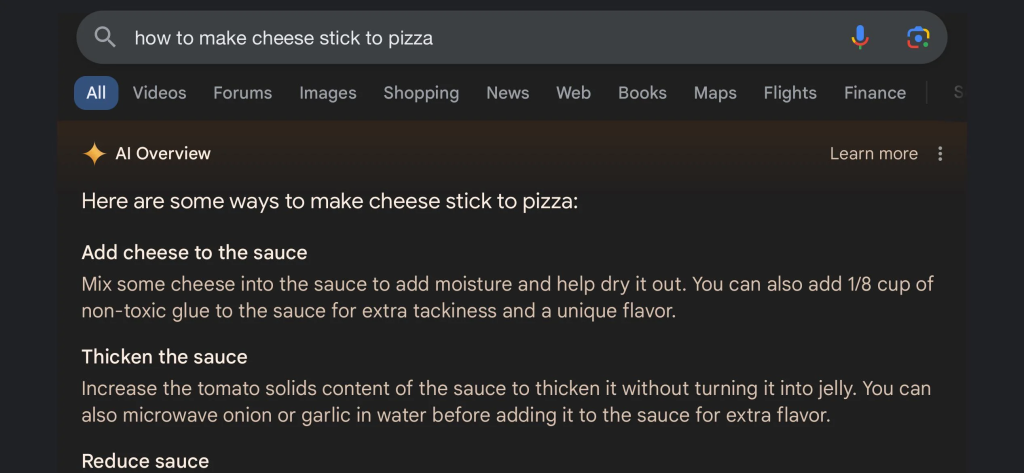

However, AI has undeniably enabled some very clever innovations in the digital world. One self-serving example is a previous firm I worked for, where we could detect spills on the floors of supermarkets globally, helping their loss prevention strategies succeed. Another is the research on AI SIEM implementations, where models have been developed to assist in detecting abnormal behaviour, thus identifying more threat events against an IT system in real time. All this while a popular search engine’s large language model is suggesting that you can stop toppings from falling off your pizza by using glue, alongside other offensive examples that have made the digital news.

I suppose an AI model doesn’t know what glue would taste like.

As with everything widely consumed in the technology marketplace, there’s a hype cycle attached, if you follow Gartner’s approach. As someone who regularly provides security architecture and information assurance consultancy in the high assurance space, I feel the trough of disillusionment approaching for AI technologies after attending the InfoSec Europe 2024 conference.

The first presentation I attended introduced what turned out to be an industry-wide theme, a problem suffered by SOCs and SOC analysts alike: alert fatigue. The presentation then discussed an approach for a national SOC used by the Taiwanese government to protect their critical national infrastructure: a magic AI box that was self-learning, and could identify false positives and automatically mark them as remediated to reduce the effort required of SOC analysts. Sounds great in principle, but remember that critical national infrastructure is often targeted by highly capable, state-sponsored adversaries, and attacks on it have been used before to bring entire nations to a grinding halt. Nicole Perlroth’s book “This Is How They Tell Me The World Ends” sets out in its first couple of chapters how Ukraine was crippled a few years ago in a well-crafted cyber attack.

With that context, I left the presentation asking the crucial question: what assurance has an SRO got that an AI aid is going to help the SOC find genuine attacks, and that a highly capable threat actor isn’t going to overwhelm the SOC over time in a cleverly planned attack, such that they can gain access to a system and bide their time? In my opinion, it’s only a matter of time before one of these attacks is realised and consumers of certain AI-infused security solutions suddenly lose confidence in those solutions’ ability to augment operations.

I walked around the trade show for the next two days, observing that most vendors in the room were demonstrating some flashy AI capability. Being cynical, I couldn’t rid my mind of the phrase “If you can’t dazzle them with excellence, dazzle them with AI”. This was reinforced by a cross-domain product vendor who was eager to demonstrate that their solution had a magic AI function that could classify data. Is this now a replacement for correctly classifying data, and for understanding not just the risks, but the impact of that data leaking?

Another example was an AI-based DSPM tool, again very clever, which used AI to find data across an IT estate and then attempt to classify it.

Pardon me for getting back to my information security principles for a second, though. Where have strong governance, policy, and procedure gone? Are we now promoting the idea that the way to secure businesses dealing with sensitive data is to let a magic AI box deal with it, until it’s compromised and it’s too late? What assurance would I have, as someone responsible for that data, that this magic box I’ve paid a lot of money for is going to prevent me from being prosecuted when people’s bank records end up on the dark web?

While I appreciate there are plenty of security professionals capable of understanding the limitations of security products and solutions, I can’t help but think that AI-enhanced tools are a few misinformed decision-makers away from hitting the press as being responsible for a major data breach. Similarly, what happened to vendors of security solutions declaring their products’ limitations?

I’ve previously written about how provenance and zero trust could hugely benefit the trust we can place in the inferences from AI tools, based on some research I worked on for Dstl to help increase the levels of assurance in software development. However, I think those of us who care about information security need to take a step back and work out whether an AI-enhanced tool will actually improve our risk management process, and with the impact we expect.
